We sold Nimrod in July 2018, planning to charter boats rather than own one. We had a terrific plan for 2020: skiing in Iran in March; a road trip in Scandinavia in June, followed by a two-week charter with friends out of the Lofoten Islands in Norway; and another cruise in October, following in the wake of Alfred Russel Wallace in Indonesia.

Lofoten Islands

Political tension in Iran scotched the ski trip, and Covid-19 put paid to the other two trips.

Bruce and Anne Stewart had been planning to go to Norway with us. They have bought into a syndicate that owns Antidote, a Seawind 1160 and sistership of Nimrod. They very kindly invited us to join them in bringing their boat south from Hamilton Island in the Whitsundays, part of the way back to its home port in Brisbane.

The meteorology of the Australian east coast goes like this. January to March carries the risk of cyclones, so it is best to stay south of the Tropic of Capricorn (Rockhampton). From April to September, south-east trade winds predominate: great for travelling north, hard for travelling south.

In October, the SE trade winds become less reliable and north-east winds start to kick in, roughly 50/50 with the SE winds.

As a result, cruising yachts often head south in October and November, using the northerlies to get out of range of the cyclones, which can start to arrive in November and December.

This is the theory. But in practice you can't rely on this pattern. One year we waited and waited for the northerlies and they never arrived.

Bruce sensibly avoided making any promises about getting us to a specific jumping-off point by a particular date. It might be Mackay, or it might be Yeppoon if the northerlies cooperated.

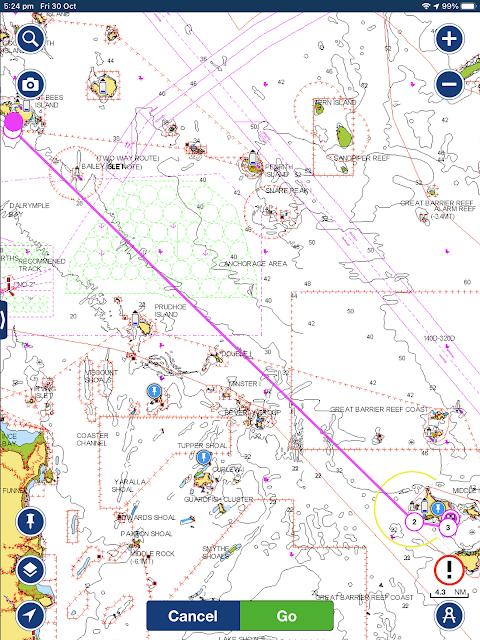

And they did. Gentle 10–15 knot north-easterlies arrived on cue as we flew into Hamilton Island on October 21st, and carried us south through the southern Whitsunday Islands to Middle Percy and the Shoalwater Bay Training Area, a wonderful remote wilderness area that is occasionally closed for military exercises.

Thursday Oct 22nd. From Hamilton Island down to St Bees Island. 45 nautical miles. New buoys are helpful.

Anne

Friday Oct 23rd. An early start for the jump to Middle Percy Island. 67 nautical miles.

Bruce, lit by the sunrise.

Sunrise leaving St Bees

Whites Bay, Middle Percy Island.

Saturday Oct 24th, down to Island Head Creek. 48 nautical miles.

Antidote, in Island Head Creek

Drone's eye view

Off for a walk on the extensive beach.

Two nights in Island Head Creek, then a leisurely cruise down via Pearl Bay to Port Clinton. The coastline here is particularly beautiful.

Girls getting exercise

Some storm activity around

SV Margot, a German boat whose world-cruising owners had been affected by Covid travel restrictions. They lent it to their son Harald to cruise with his Peruvian fiancée Leydi.

Some are pretty big.

You have to be careful not to collect them from protected zones.

Keeping an eye on the weather, we sailed on south to Keppel Bay Marina at Rosslyn Bay, near Yeppoon. A storm hit after we were safely tied up.

George and I rented a car and drove back up to the Whitsundays, while Bruce and Anne sailed out to the Great Barrier Reef.

In Airlie Beach, we chartered another Seawind 1160, Sea Dragon, from Whitsunday Escape.

More perfect weather, and no particular place to go. Very relaxing.

We visited Stonehaven Bay, Blue Pearl Bay and Butterfly Bay.

Butterfly Bay

Butterfly Bay

Thousands of flying foxes heading for Whitsunday Island at sunset

Goanna

Sea Eagle

Clouds from Mays Bay